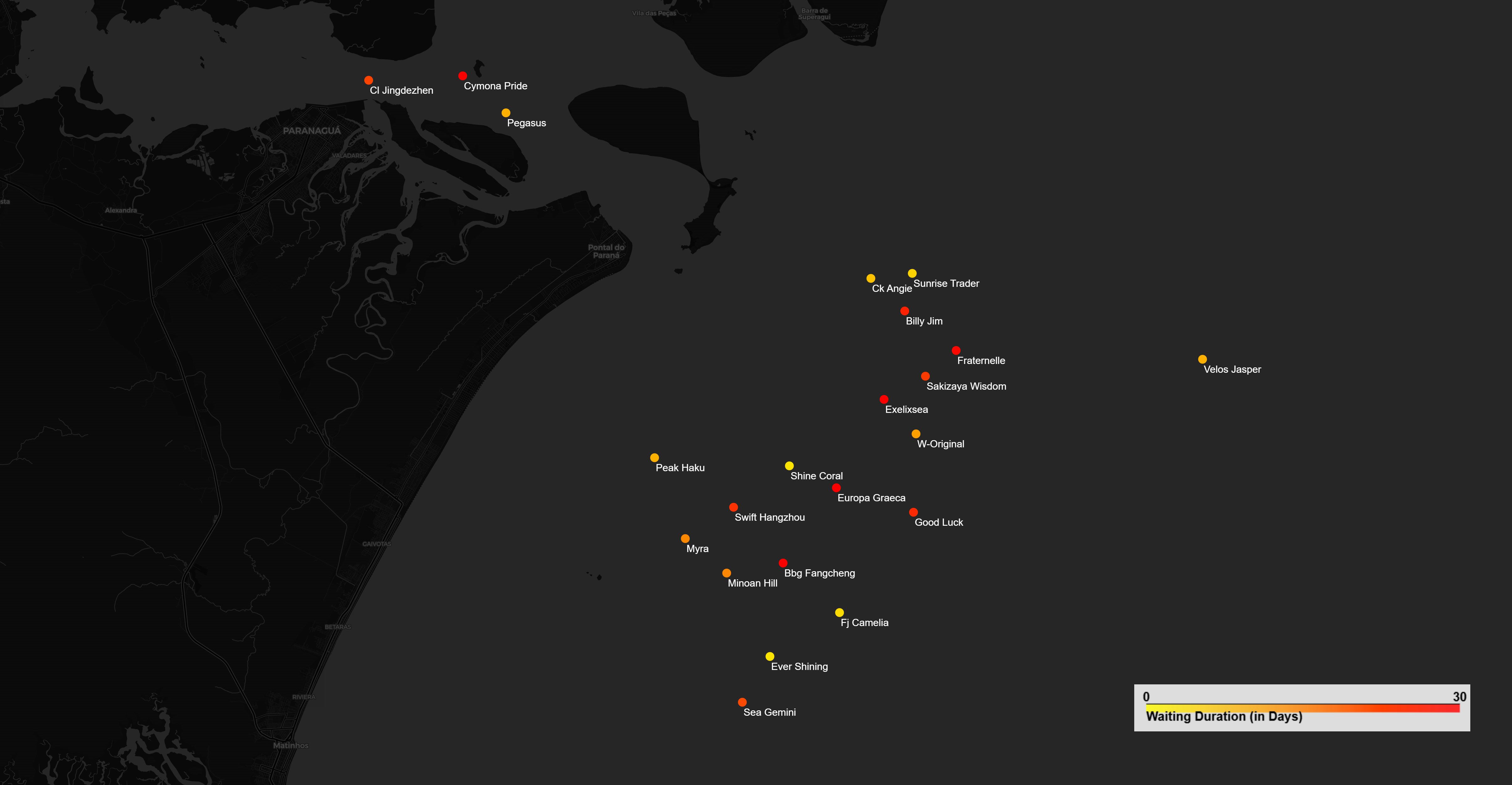

⚓ Congestion

Get all Panamaxes (DWT between 67,000 and 95,000 tons) currently waiting at anchorage off Paranaguá, and compute how many days each has been waiting.


import requests
import pandas as pd
from datetime import datetime, timezone

# Base url
url = "https://apihub.axsmarine.com/dry/ship-status/current/v2?"

params = {
    "page_size": "5000",
    "vessel_dwt_from": "67000",
    "vessel_dwt_to": "95000",
    "polygon_ids": "9724,9725",  # Paranagua Inner & Outer Anchorages
}

# Add token
token = "INSERT MY TOKEN"
headers = {
    "Authorization": "Bearer {}".format(token)
}

# Add parameters as a query string (name=value pairs joined with "&")
url = url + "&".join(name + "=" + value for name, value in params.items())
print("Url to query :", url)

# First page
response = requests.get(url, headers=headers).json()
total = pd.DataFrame(response['results'])
next_url = response['links']['next']

# Loop to get all results: follow the "next" link until there is none
while next_url is not None:
    response = requests.get(next_url, headers=headers).json()
    df = pd.DataFrame(response['results'])
    next_url = response['links']['next']
    total = pd.concat([total, df], ignore_index=True)

# Whole days spent at anchorage so far, measured against the current UTC time
now = datetime.now(timezone.utc)
total['in_anchorage_since'] = pd.to_datetime(total['in_anchorage_since'], utc=True)
total['anchorage_duration'] = (now - total['in_anchorage_since']).dt.days

# Export the result
total.to_csv("vessels_at_anchorage.csv", index=False)
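
The pagination loop above can also be isolated in a small helper, which makes it possible to try the logic without a token or network access. The following is only a sketch: `fetch_all_results`, `get_json`, `max_pages` and the fake pages are hypothetical names and data introduced for illustration, and the response shape (`results` plus `links.next`) is assumed from the script above rather than taken from the API reference.

```python
from typing import Any, Callable, Dict, List, Optional


def fetch_all_results(
    first_url: str,
    get_json: Callable[[str], Dict[str, Any]],
    max_pages: int = 1000,
) -> List[Dict[str, Any]]:
    """Follow links.next from page to page and collect every item in results.

    get_json is a hypothetical callable that takes a URL and returns the
    decoded JSON body, assumed to look like
    {"results": [...], "links": {"next": <url or None>}}.
    """
    rows: List[Dict[str, Any]] = []
    next_url: Optional[str] = first_url
    pages = 0
    while next_url is not None:
        # Safety cap (an assumption, not an API rule) to avoid looping forever
        if pages >= max_pages:
            raise RuntimeError("stopped after {} pages".format(max_pages))
        payload = get_json(next_url)
        rows.extend(payload["results"])
        next_url = payload["links"]["next"]
        pages += 1
    return rows


# Offline demonstration with two fake pages (made-up data, no network access)
fake_pages = {
    "page-1": {"results": [{"vessel": "A"}, {"vessel": "B"}], "links": {"next": "page-2"}},
    "page-2": {"results": [{"vessel": "C"}], "links": {"next": None}},
}
rows = fetch_all_results("page-1", fake_pages.__getitem__)
print(len(rows))
```

Against the real endpoint, `get_json` could be something like `lambda u: requests.get(u, headers=headers).json()`, and `pd.DataFrame(rows)` would then build the full table in one step instead of concatenating one DataFrame per page.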